Who can we trust for software?

Thu 15 September 2016

TL;DR: We need to figure out how to guarantee that software can be trusted.

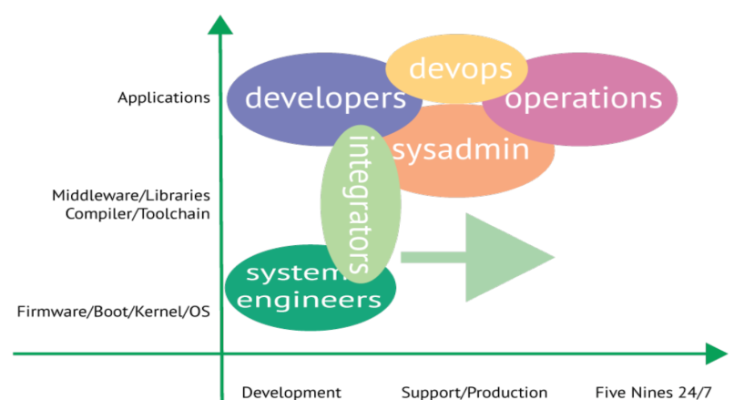

In the over-simple graphic above, I've tried to map some of the key technical roles being performed in the delivery and maintenance of complex systems and services. I'm struggling to work out who precisely sits in the bottom right corner, but I strongly believe we (i.e. everybody) need these people to exist, and we need to trust their work.

Trust is a difficult and complex topic, and sadly it feels like there is more evidence every day that people don't care enough about it.

Some politicians now seem happy to literally and blatantly disregard the facts, as opposed to just hiding or manipulating them. And some scientists are not much better, as the latest exposé on the murky past of the sugar industry shows.

I can't make any strong claims about the ethics of software people in general, but in my direct experience the folks I've worked with have tended to be honest about their work, and I value that highly.

Engineers may argue and complain a lot, make mistakes, and be completely incapable of estimating how long things will take, of course :-) But normally technical people don't set out to deliberately do the wrong thing and lie about it, unless they are pressured or incentivised to do so.

I struggle to believe that "evil software engineers" were the root cause of the vehicle emissions cheating scandal.

And when software goes wrong, I would default to blaming inexperience, confusion and incompetence, rather than conspiracy.

But obviously there are bad actors in software, and their influence has become much more visible over recent years.

Trouble is, most people don't seem to care very much about the risks until they are impacted directly. We're mostly happy to

- shovel our personal info and passwords into Google, Facebook, LinkedIn et al.

- share our most private moments and conversations via phones and websites

- use online banks and retailers, and carry wireless electronic cards so we can be robbed over the air without anyone even needing to pick our pockets

- travel far, at great speeds, in metal boxes that are entirely operated via software

I think the big metal boxes will be the tipping point.

Now that we're connecting all the metal boxes to the network, people are finally going to have to wake up.

As far as I know, no-one has died as a result of hacks to cars or aeroplanes so far, but it's bound to happen. And when it does, the media will be all over the tech community.

"How could you let this happen?" and "What (TF) are you going to do to fix it?"

I'm expecting some seismic changes as a result:

- A clear separation between 'developers', who just want or need to build apps and services on top of infrastructure software that they can assume is trustable, and the people who commit to producing trustable infrastructure code.

- A realisation that trustability is harder (maybe impossible) to achieve without giving everyone access to the source code.

- A lot of attention on how to verify, in public, that the infrastructure software really is trustable.

- As a result of the above, a lot more formal verifiability and verification of open source software.

And then hopefully we'll all know who we can trust to guarantee that software is what it appears to be, and does what it claims to do.

Reposted from LinkedIn